When “AI Is Bad” Becomes a Course Catalog

How institutions build programs faster than we form questions

I was at a board game night hosted by someone I barely knew. One of those evenings where no one explains the rules, everyone's drinking herbal tea from mugs that aren't theirs, and half the group are friend-adjacent strangers vaguely aware of each other's jobs.

A woman walked in and said flatly, to no one in particular: "AI is bad."

That was it. We looked up. Not in challenge—just expectation. As if to say—go on. The polite pause you give someone when you expect a second sentence.

She gave us one: "That's why I'm going back to school. To fix it."

Not elaboration. Not context. Just a take and a tuition payment.

She said the program's name—something with “artificial” and “systems,” and probably the word "human" in it. Or maybe it was “autonomous”? I asked twice more and never caught it. One of those freshly built degrees with at least 17 syllables, three hyphens, and a dangling modifier.

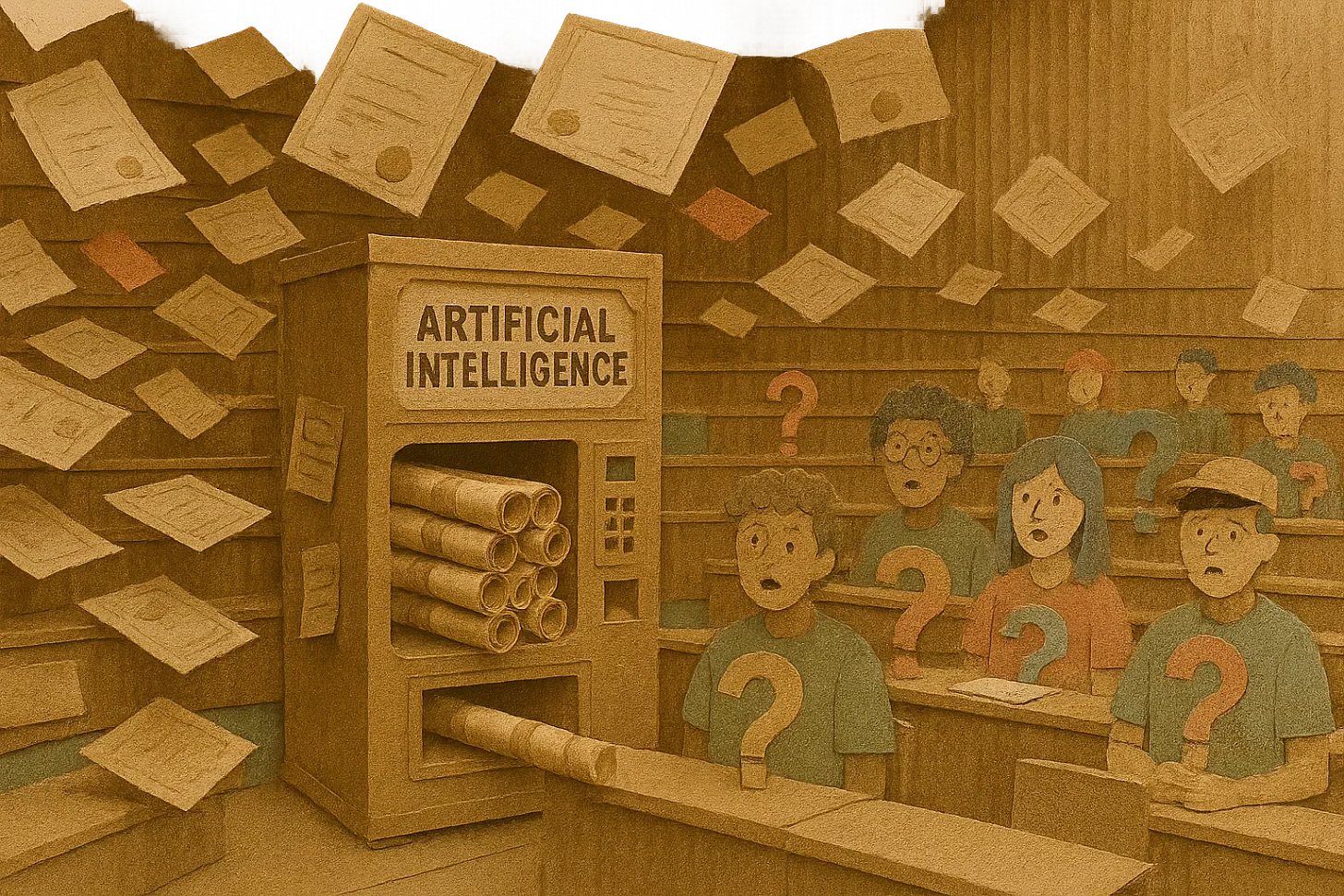

The degree was academic merch for a band that hasn't released an album yet. But the moment captured something broader: we often institutionalize responses to emerging technologies before articulating what we're trying to achieve. Media hype and institutional momentum race ahead of understanding, erecting structures before anyone has done the hard work of defining the problem. It's like building amusement park rides based on a movie nobody's seen.

Following the corporate scandals of the early 2000s, "servant leadership" grew into a billion-dollar industry. Consultants ran trainings. Books were written. Universities created executive programs. All the while, the term remained ambiguous enough that almost any behavior could "pass."

After the 2008 financial crisis, we saw the same with "sustainability," which spawned certificate programs and consultancies without clear definitions. After 2020, "DEI" initiatives followed a similar pattern of rapid institutionalization. In each case, sentiment hardened into structure before passing through the crucible of articulation: the institutional equivalent of buying furniture for a house whose architectural plans consist entirely of vibes.

This pattern has three key components:

The evolution of language: when words lose specific meaning, allowing vague considerations to seem universally relevant

The commercialization and media hype: the gold rush around emerging concepts

The psychology of binary thinking: how unelaborated statements like "AI is bad" gain traction and drive action

The Evolution of Language

When Words Float Free

A statement like "AI is bad" draws much of its power from the way the vocabulary of technological discourse has come unmoored from specific meanings.

"Artificial intelligence" has become a floating signifier, disconnected from technical specificity. It's the "gluten" of tech discourse: everyone's avoiding it, few can define it, and most people talking about it couldn't pick it out of a lineup if their Series A depended on it. The term has the remarkable property of sounding profitable to venture capitalists and terrifying to almost everyone else, like quantum physics for people who think quantum physics explains why their horoscope was right that one time.

For some, "AI" means narrow machine learning algorithms. For others, the looming specter of artificial general intelligence. For others still, an entire sociotechnical system of data collection and decision-making. It's a Rorschach test administered via PowerPoint—everyone nodding while seeing entirely different patterns, except this inkblot test results in organizational restructuring.

Other terms follow similar patterns:

"Algorithm" has shifted from a specific mathematical procedure to a catch-all for automated decisions, much as "chemical" shifted from a neutral scientific term to a synonym for "definitely causes cancer." People talk as if a sentient line of code somewhere were deciding their credit score out of spite.

"Ethics" has become detached from philosophical traditions, functioning primarily as institutional air freshener: spray some around when things start to smell. A quick spritz on your privacy policy and voilà, freshly laundered moral considerations, no philosophy degree required.

The real genius of "artificial intelligence" isn't how precisely it captures a technological phenomenon, but how imprecisely it does so while still sounding technical enough to generate research funding, academic certifications, and billable hours for management consultants. It's a linguistic Swiss Army knife of fundraising utility, simultaneously useful for confounding policymakers, impressing investors, and justifying academic departments whose faculty can't quite agree on what they're studying but are absolutely certain there should be more of them studying it.

The Commercialization and Media Hype

The Paradox of Premature Expertise

What's striking about our institutional response is the paradox at its core: we're creating experts before establishing what they should be expert in. The credential precedes the discipline. We're certifying knowledge before it has any epistemic foundations. The cap and gown come before anyone's figured out what we're celebrating, but the tuition checks have definitely cleared.

The danger isn't simply that these programs might be premature. It's that they might actively obstruct more substantive understanding by creating an illusion of epistemic progress. Once credentials exist, they develop gravitational pull – resources and legitimacy flow toward them and away from alternative approaches. When legitimacy is uncertain, institutions move fast not to lead but to not be left behind—academic FOMO as a pedagogical principle. No one wants to be the last university to certify expertise in tomorrow's panic.

I suppose there's a certain perverse efficiency to creating credentials for problems we haven't defined yet. It's a bit like prescribing medication before diagnosing the illness—inefficient for medicine perhaps, but remarkably efficient for pharmaceutical sales. We've industrialized uncertainty-reduction without bothering to reduce any actual uncertainty.

How Ambiguity Becomes Institutional Structures

Peter Berger and Thomas Luckmann's sociology of knowledge provides insight into how simplified statements translate into concrete institutional actions—such as enrolling in multi-syllabic, grammatically questionable degree programs.

In "The Social Construction of Reality," they describe how subjective impressions become objective social facts through three moments:

Externalization: People express subjective concerns about AI through statements, articles, conversations—"I saw someone use ChatGPT once and it seemed creepy"

Objectivation: These expressions are repeated, collected, categorized until they appear as objective facts rather than interpretations—"Studies show widespread concern about AI."

Internalization: New generations encounter these "facts" as given reality rather than constructed meaning—"Of course we need AI programs; why else would there be so many AI programs?"

This process transforms the vague sentiment "AI is bad" into apparently objective claims, like "AI poses significant ethical challenges that require specialized training." Once objectified, these claims demand institutional responses. It's how a feeling becomes a course catalog entry – emotions becoming paperwork, anxiety becoming accreditation, and vague discomfort becoming very specific revenue.

The institutional world creates what Berger and Luckmann call "legitimations" – explanations connecting now-objective problems to specific solutions. A university might legitimize a new AI program by citing "growing concerns" and "urgent needs" without specifying what those concerns or needs are. The urgency, you'll note, always aligns perfectly with the fall semester's tuition deadline—a remarkable cosmic coincidence.

The Gold Rush Effect: Monetizing Ambiguity

This rapid commercialization is what business theorists might call a "gold rush effect" – where ambiguous concepts create market opportunities that institutions rush to exploit. It's not that everyone is panicking about AI; it's that everyone is figuring out how to cash in on the conversation. The only thing moving faster than machine learning advancements is the speed at which people are establishing careers explaining why we should be concerned about them.

The academic response follows a predictable template. Programs emerge overnight promising to be "future-proof," "first in the state," and "responding to urgent societal needs." University websites feature the same constellation of buzzwords: "ethical foundations," "cross-sector partnerships," "responsible innovation," "strategic advantage" – all without specificity. Program names stretch to 17+ syllables, centers get named after tech billionaires, and hiring plans ambitiously propose adding 60+ faculty within impossibly short timeframes. It's institutional Mad Libs where every blank gets filled with "transformative," "interdisciplinary," or "paradigm-shifting."

Many AI ethics positions function as what anthropologist David Graeber called "box tickers": roles that create the appearance of oversight without substantively changing organizational practices. Ethics positions often deflect regulatory pressure while maintaining the status quo. It's an institutional rain dance: elaborate, ceremonial, completely disconnected from actual weather patterns. But don't worry, someone's getting tenure for it. We've invented the professional equivalent of a placebo: positions that look like oversight, feel like oversight, but somehow never produce any actual oversight.

Codifying Vibes into Epistemic Hollowness

The institutional codification of vague concerns has a deeper consequence: we risk training people to move without asking what they're moving toward. When action precedes clarity, we cultivate a professional class that excels at implementation but struggles with interrogation.

We produce experts in how rather than why: technicians of ethics, not thinkers of it. They're like baristas who can pour perfect foam art but burn the latte.

Consider what this means for future practitioners. They learn to navigate institutional responses without developing the capacity to articulate what specific anxieties we're responding to. They become fluent in frameworks without fluency in the questions that should precede frameworks. It's like learning to perfectly operate a fire extinguisher without being taught to identify what's burning—procedural competence without contextual understanding. Here's your ethics certification; please don't ask what ethics is.

The result is a generation of professionals with remarkable clarity about how to fill out the ethics compliance form, but profound confusion about what makes something ethical in the first place. They can check all the boxes without knowing why the boxes exist—the bureaucratic equivalent of people who can recite their zodiac sign but not locate it in the night sky.

The Psychology of Binary Thinking

Why We Pick Sides

To understand how simplified statements like "AI is bad" gain traction, we need to examine why humans seem so predisposed to binary thinking. Curiously, the statement would have been equally puzzling had she declared "AI is good" and left it at that. Either way, the binary framing skips the crucial work of articulation.

Our brains evolved in environments where rapid categorization meant survival. The neural circuitry that helped our ancestors make split-second judgments still shapes how we process complex modern phenomena. We're running cognitive software optimized for sabertooth tiger detection to evaluate machine learning algorithms. The results are about what you'd expect—like trying to open a PDF with a can opener.

Psychologists call this "dichotomous thinking": our default habit of sorting complex realities into binary oppositions. It's not a bug; it's a feature that helped our ancestors navigate environments where nuance was a luxury survival couldn't afford. They didn't have time for "Well, technically, that rustle in the bushes could be several things..." Those who paused for nuanced assessment became lunch.

It's worth questioning whether negative declarations about AI actually outnumber positive ones. For every doomsayer predicting robot apocalypse, there's a techno-optimist promising utopia. The reality is more complicated—both simplified positive and negative framings thrive in our discourse, often with equal lack of articulation. We've created a binary discourse where the only positions seem to be "AI will kill us all" or "AI will save us all," with the less marketable middle ground of "It depends on what we build and how we govern it" struggling to find air time.

When we do gravitate toward negative framings, psychologists suggest it may be due to "negativity bias": our tendency to notice what might harm us before we notice what might help us. An ancestor who mistook the wind for a predator lost a few minutes; one who mistook a predator for the wind lost everything. Evolution selected for anxiety, not accurate threat assessment.

Dual-process theory, popularized by Daniel Kahneman, helps explain this mechanism. It describes the mind as operating through two distinct systems:

System 1: Fast, automatic, intuitive, emotional. The part of your brain that sees a photo of Mark Zuckerberg and immediately thinks "lizard person."

System 2: Slow, deliberate, analytical, requiring conscious effort. The part that remembers that lizard people aren't real, but only after System 1 has already sent the conspiracy theory to your group chat.

When someone encounters a statement like "AI is bad," their System 1 often takes over, activating several cognitive patterns:

Tribal signaling: Taking sides serves as a social identity marker. Declaring "AI is bad" signals membership in particular intellectual tribes. These declarations function less as epistemic claims and more as membership badges—technological festival wristbands indicating which afterparties you're likely to attend. It's the conversational equivalent of wearing a band t-shirt at a concert.

Cognitive ease: Simple statements feel truer than complex ones. The phrase "AI is bad" requires minimal cognitive effort to process, creating a deceptive sense of clarity. Our brains confuse easy processing with truth—the psychological equivalent of mistaking fast food for fine dining because it arrived quickly.

Affect heuristic: Emotional responses arrive faster than analytical ones, so a word like "bad" triggers a reaction before any analysis begins. Your brain has already filed the concept under "threats" while still trying to figure out what it's supposed to be afraid of. It's like your fire alarm going off before checking whether someone's just making toast: safety first, accuracy optional.

This tendency toward binary thinking is reinforced by our media ecosystem. Social platforms reward strong, simplified positions rather than nuanced analysis—their algorithms value engagement over accuracy. The result is what media theorist Neil Postman called "context-free information"—facts stripped of the complex systems that give them meaning, floating freely for us to sort into preexisting bins labeled "good" or "bad." We've optimized our information systems for hot takes, not hot thought.

What's particularly interesting about AI as a subject of moral judgment is how effectively it functions as a "moral inkblot": an ambiguous stimulus onto which we project preexisting values and concerns. When someone says "AI is bad," they might be thinking about job displacement, algorithmic bias, existential risk, privacy violations, or the displacement of creative work, but the statement itself doesn't distinguish between these vastly different concerns. It's a testament to the term's remarkable ambiguity that socialists and libertarians can both say "AI is bad" and mean entirely different things.

The Cost of Skipping the Question

What gets lost when we leap to action before understanding? What might we recover if we paused?

Perhaps most importantly, the leap to action devalues the process of understanding. The space for questions shrinks as answers become codified. The messy, uncertain work of grappling with complex sociotechnical systems gives way to the comforting certainty of curricula and credentials. We trade the anxiety of not knowing for the comfort of pretending to know—epistemic comfort food that satisfies without nourishing.

We've managed to industrialize concern with remarkable efficiency, mass-producing courses, certificates, and centers faster than we can articulate what ethics might actually require of us. We now have ethical oversight at institutional speed but intellectual clarity at human speed, and the gap between them grows by the semester. The bureaucracy of ethics advances while the substance of ethics struggles to keep pace.

Finding Space to Think

What might an alternative approach look like? How might we respond to legitimate concerns about AI without skipping the crucial work of articulation?

What philosopher Hans-Georg Gadamer called a "fusion of horizons" seems relevant here—the meeting of different perspectives that expands understanding. This means bringing together technical, social, historical, and philosophical perspectives not in token interdisciplinary gestures but in sustained dialogue. Understanding complex systems requires more voices, not just more credentials. Our current approach to interdisciplinarity resembles a dinner party where everyone brought appetizers and is now wondering why there's no main course—lots of interesting small bites, zero nutritional coherence.

Institutional responses aren't always the most appropriate reactions to emerging concerns. Sometimes the right response to uncertainty is patience – the willingness to sit with questions before rushing to answers. This patience is the active work of thinking that precedes meaningful action. It's the difference between reaction and response, between motion and direction—like the pause before you speak rather than the words you regret.

We've confused accreditation with comprehension, urgency with clarity, and noise with depth. No wonder we keep reacting faster than we can name the problem. We're constructing elaborate infrastructure on foundations of conceptual quicksand.

Pausing to demand better articulation first won't generate as many headlines or fill as many course catalogs. But consensus becomes a lot easier when we share an understanding of what we're actually worried about.

Comments are open.